Towards the end of this semester, we're covering one of the last topics: software, one of the most essential parts of a computer, since it is what makes a computer unique to each user.

Flowchart Presentation

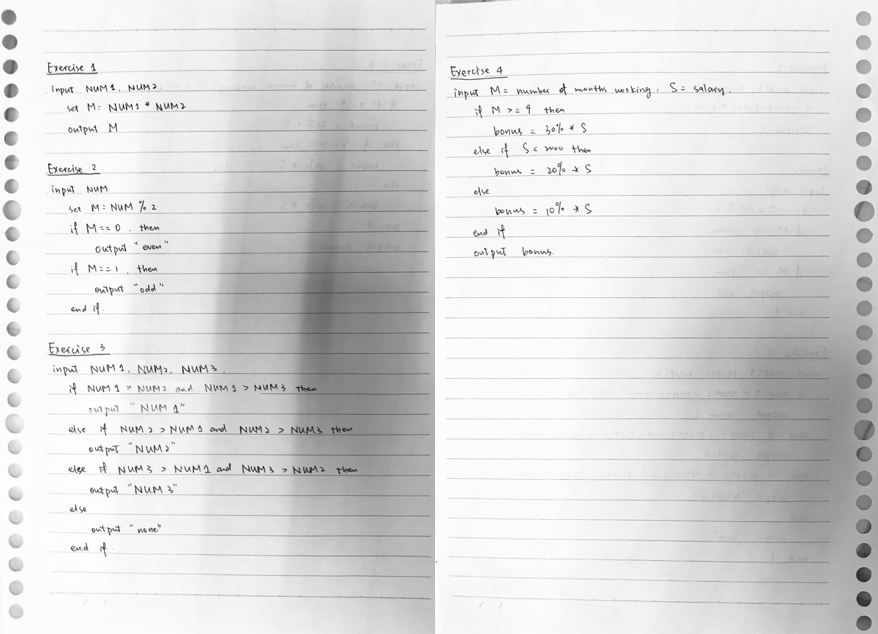

The week began with something left over from last week: the flowchart presentations. We were assigned, in pairs, to choose a topic we were interested in and solve it in the form of a flowchart and pseudocode, which we then presented to the class.

After a series of discussions and turning down lots of possible topics, my partner and I eventually settled on “should you raise a cat”. This may not be the most entertaining topic, but we believe it's quite important and relevant to everyday life, since many of us are raising or considering raising a pet, which can be a serious, carefully weighed life decision.

To solve this problem, we used the idea of COMPUTATIONAL THINKING. First, decomposition. We broke the problem down into several questions: whether you have the time, money and energy to raise a cat, whether your physical and mental health are suitable, and so on.

Second, abstraction. Focusing on individual questions, we used if-else conditions and thought about what would happen when the answer is “yes” and when it is “no”.

Then, pattern recognition. In this case, the pattern is how the “yes”s and “no”s in each question are handled. Usually, when the answer is “yes”, in other words, when the condition is suitable for raising a cat, the flowchart goes on to the next question and evaluates whether the user should raise a cat from another aspect. When the answer is “no”, however, we consider whether the unfavorable condition can be made up for, so the flowchart either goes on to the make-up question or outputs “you shouldn’t raise a cat” directly and ends.

Finally, algorithm design. We listed the steps for judging whether to keep a cat and composed a flowchart and its corresponding pseudocode, as shown in the picture below.
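The decision process described above can be sketched in Python. This is a hypothetical reconstruction rather than our actual pseudocode: the exact question wording, the `QUESTIONS` list, the `should_raise_cat` function, and which questions have a make-up follow-up are all assumptions made for illustration. It shows the pattern we recognized: a “yes” moves on to the next question, while a “no” either leads to a make-up question or ends with “you shouldn’t raise a cat”.

```python
# Hypothetical sketch of the "should you raise a cat" flowchart.
# Each step is (main question, make-up question asked only when the answer is "no").
QUESTIONS = [
    ("Do you have enough time to look after a cat?", "Can someone share the care with you?"),
    ("Can you afford food, litter and vet bills?", None),
    ("Do you have the energy to play with a cat every day?", None),
    ("Is your physical health suitable (e.g. no cat allergy)?", "Is the allergy mild and manageable?"),
    ("Is your mental health suitable for the responsibility?", None),
]


def should_raise_cat(answers: dict[str, bool]) -> str:
    """Walk the flowchart; `answers` maps each question to True ("yes") or False ("no")."""
    for question, makeup in QUESTIONS:
        if answers[question]:
            continue  # "yes": move on and judge from the next aspect
        if makeup is not None and answers.get(makeup, False):
            continue  # "no", but the unfavorable condition can be made up for
        return "You shouldn't raise a cat."  # "no" with no make-up: stop here
    return "You can consider raising a cat."


answers = {question: True for question, _ in QUESTIONS}
answers["Is your physical health suitable (e.g. no cat allergy)?"] = False
print(should_raise_cat(answers))  # You shouldn't raise a cat.
answers["Is the allergy mild and manageable?"] = True
print(should_raise_cat(answers))  # You can consider raising a cat.
```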

Software

Hierarchy of Software

Recall what we learned at the beginning of the semester about the layers of a computing system: the operating system layer and the application layer can be combined to form the software layer. This idea comes from the hierarchy of software, as shown in the chart below.

Software can be divided into two categories: system software and application software. System software can be further classified into operating systems, library programs, utility programs, and programming language translators, while application software is made up of general-purpose application software, special-purpose application software, and bespoke application software. I’ll elaborate on each of these in the rest of this blog post.
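One way to picture this hierarchy is as a tree. The minimal sketch below stores the categories in a nested Python dictionary (the names `SOFTWARE_HIERARCHY` and `tree_lines` are made up for illustration) and prints it with indentation, so each category sits under its parent. The three translator types come from the Translator Software section later in this post.

```python
# The software hierarchy stored as a nested dictionary: each key is a category,
# and its value holds the sub-categories underneath it.
SOFTWARE_HIERARCHY = {
    "Software": {
        "System software": {
            "Operating systems": {},
            "Library programs": {},
            "Utility programs": {},
            "Programming language translators": {
                "Assemblers": {},
                "Compilers": {},
                "Interpreters": {},
            },
        },
        "Application software": {
            "General-purpose": {},
            "Special-purpose": {},
            "Bespoke": {},
        },
    }
}


def tree_lines(node: dict[str, dict], depth: int = 0) -> list[str]:
    """Return one indented line per category, with parents listed before children."""
    lines = []
    for name, children in node.items():
        lines.append("    " * depth + name)
        lines.extend(tree_lines(children, depth + 1))
    return lines


print("\n".join(tree_lines(SOFTWARE_HIERARCHY)))
```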

Video: Computer Software

To better understand the role of computer software, we watched a video clip that explains it in plain English. The video compares computer software to a translator between users and the computer, one that understands our needs and puts the computer to work for us. According to the video, most computer software comes in two basic kinds: operating systems and software programs.

Operating systems come with a new computer and do many of the same things. macOS, Windows, and Linux are everyday examples of operating systems. They cover the basics, like saving a file and using a mouse.

However, to make a computer more useful and personalized, software programs are added. Essentially, a software program is just a set of instructions that tells the computer what to do. All in all, it’s the combination of operating systems and software programs that “brings computers alive” and makes them so useful. While the video clip teaches us the basics of computer software in plain English, we still need to learn the keywords and the actual terminology.

System Software

System software is essential to both hardware and application software, since it is designed to operate the computer hardware and to provide a platform for running application software. Four main types of system software exist: operating system software, utility programs, library programs, and translator software.

Operating System Software

Operating systems are a collection of programs that make the computer conveniently available to the user. One obvious feature of an operating system is that it hides the complexity of the computer’s operation. It is an interface between application software and the computer hardware. In other words, the operating system interprets requests from application software, so an application has to go through the operating system before it can communicate with the hardware.
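To make the “application goes through the operating system” idea concrete, here is a small Python sketch. Python’s `os.open`, `os.write`, `os.read` and `os.close` are thin wrappers around operating-system calls, so the program never touches the disk hardware itself; it asks the OS to store and fetch the bytes. The `save_and_load` function and the file name `example.txt` are just illustrative choices.

```python
import os
import tempfile


def save_and_load(text: str) -> str:
    """Write text to a file and read it back, using only low-level OS requests."""
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "example.txt")

        # Ask the OS to create/open the file; it hands back a small integer "file descriptor".
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC)
        os.write(fd, text.encode("utf-8"))  # the OS decides where on the disk the bytes go
        os.close(fd)

        # Ask the OS to open the same file again and give us up to 1024 bytes of it.
        fd = os.open(path, os.O_RDONLY)
        data = os.read(fd, 1024)
        os.close(fd)
    return data.decode("utf-8")


print(save_and_load("Hello from the application layer"))
```

The application only ever talks to the operating system through these requests; the OS handles the file system and the actual storage device.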

Library Program

Library programs are widely used in creating games. A library program is a collection of compiled routines or functions that other programs can use. It contains code and data that provide services to other programs, such as user-interface code, printing, networking code, and the graphics engines of computer games.
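A short Python sketch of the idea: instead of writing its own distance or averaging code, a program calls ready-made routines from libraries. Here Python’s standard `math` and `statistics` modules play the role of library programs (in CPython, `math` is implemented as a compiled C extension); the game-flavoured `distance` function and the `scores` list are made up for illustration.

```python
import math
import statistics


def distance(x1: float, y1: float, x2: float, y2: float) -> float:
    """Distance between two points, e.g. a player and an enemy in a 2D game."""
    return math.hypot(x2 - x1, y2 - y1)  # reuses a library routine instead of our own code


scores = [120, 95, 143, 110]
print(distance(0, 0, 3, 4))     # 5.0
print(statistics.mean(scores))  # average of the four scores, also computed by the library
```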

Utility Software

Each utility program performs a very specific task related to working with computers. These programs are usually small and limited in what they do, yet extremely powerful. Unlike application software, utility programs perform their tasks not for the user’s personal needs but to keep the computer system running smoothly.

When talking about utility software, we had a little activity: discussing utility programs we’ve used before. An example Judy and I came up with was the firewall program. Other students mentioned virus scanners and file managers.
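Utility programs tend to be small tools that look after the system rather than the user’s own work. As a minimal sketch, the hypothetical `disk_report` function below does one such job, reporting how full a disk is, using Python’s standard `shutil.disk_usage`. The 90% warning threshold is an arbitrary assumption.

```python
import shutil


def disk_report(path: str = ".", warn_at: float = 0.9) -> str:
    """Summarise disk usage for the drive that contains `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (all in bytes)
    fraction_used = usage.used / usage.total
    gib = 1024 ** 3
    status = "WARNING: disk almost full" if fraction_used >= warn_at else "OK"
    return (f"{usage.used / gib:.1f} GiB used of {usage.total / gib:.1f} GiB "
            f"({fraction_used:.0%}) - {status}")


print(disk_report())
```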

Translator Software

Translator software allows new programs to be written and run on computers by converting source code into machine code. There are three main types of translator software: assemblers, compilers, and interpreters.

An assembler is a program that translates an assembly language program into machine code (‘0’s and ‘1’s). Assembly language is a type of mnemonic code. For example, ‘load the value at address X into the accumulator’ is written ‘LDA X’ in assembly language, where ‘LD’ is short for ‘load’ and ‘A’ stands for the accumulator register. Assembly language is a low-level programming language, and machine language can be considered the lowest-level programming language.
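A toy assembler shows what translating mnemonics into 0s and 1s means. Everything about the instruction set here is hypothetical: the mnemonics, the 4-bit opcodes and the 4-bit numeric addresses are invented for illustration and do not match any real processor (real assemblers also handle labels, named variables and many addressing modes).

```python
# Hypothetical 8-bit instruction format: a 4-bit opcode followed by a 4-bit memory address.
OPCODES = {
    "LDA": 0b0001,  # load the value at an address into the accumulator
    "ADD": 0b0010,  # add the value at an address to the accumulator
    "STA": 0b0011,  # store the accumulator at an address
    "HLT": 0b0000,  # halt (address part ignored)
}


def assemble(program: str) -> list[str]:
    """Translate each assembly line into an 8-character string of 0s and 1s."""
    machine_code = []
    for line in program.strip().splitlines():
        parts = line.split()
        mnemonic = parts[0].upper()
        address = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES or not 0 <= address <= 15:
            raise ValueError(f"cannot assemble {line!r}")
        # Opcode bits first, then address bits, each padded to 4 binary digits.
        machine_code.append(f"{OPCODES[mnemonic]:04b}{address:04b}")
    return machine_code


print(assemble("LDA 5\nADD 6\nSTA 7\nHLT"))
# ['00010101', '00100110', '00110111', '00000000']
```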

A compiler is a program that takes a program written in a high-level language, the source code, and translates it into object code all at once. Most phone apps and Microsoft Word are examples of programs that were compiled before being distributed to users. A compiler first reads the entire program and translates it in one go. This step can be fairly slow, but afterwards the computer can execute the translated instructions directly, so running the program is very fast.
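To illustrate “translate everything first, then run”, here is a minimal sketch of a compiler for a made-up three-instruction language (`SET <var> <int>`, `ADD <var> <int>`, `PRINT <var>`). The language, the numeric opcodes and the function names are all hypothetical. `compile_program` checks and translates the whole program before any of it runs, producing a list of simple instructions that stands in for object code, and `run_compiled` can execute that result again and again without retranslating.

```python
# Hypothetical toy language: "SET <var> <int>", "ADD <var> <int>", "PRINT <var>".
OPCODES = {"SET": 0, "ADD": 1, "PRINT": 2}
TOKENS = {"SET": 3, "ADD": 3, "PRINT": 2}  # tokens expected on each kind of line


def compile_program(source: str) -> list[tuple[int, str, int]]:
    """Translate the whole program at once into (opcode, variable, value) triples."""
    code = []
    for number, line in enumerate(source.strip().splitlines(), start=1):
        parts = line.split()
        if not parts or parts[0] not in OPCODES or len(parts) != TOKENS[parts[0]]:
            # Every line is checked before any of the program runs.
            raise SyntaxError(f"line {number}: cannot translate {line!r}")
        value = int(parts[2]) if len(parts) == 3 else 0
        code.append((OPCODES[parts[0]], parts[1], value))
    return code


def run_compiled(code: list[tuple[int, str, int]]) -> list[int]:
    """Execute already-translated instructions; returns the printed values."""
    variables: dict[str, int] = {}
    output: list[int] = []
    for opcode, variable, value in code:
        if opcode == OPCODES["SET"]:
            variables[variable] = value
        elif opcode == OPCODES["ADD"]:
            variables[variable] += value
        else:  # PRINT
            output.append(variables[variable])
    return output


program = compile_program("SET x 5\nADD x 3\nPRINT x")
print(program)                # [(0, 'x', 5), (1, 'x', 3), (2, 'x', 0)]
print(run_compiled(program))  # [8]
print(run_compiled(program))  # [8]  (no retranslation needed)
```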

An interpreter analyses and executes a high-level language program one line at a time. The program has to be interpreted every time it is run, as no object code is generated. For example, the JavaScript code on websites has traditionally been run by an interpreter built into the web browser. An interpreter always sits between the user and the computer, translating the instructions and passing them to the computer to execute.
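Here is a minimal sketch of an interpreter for a made-up three-instruction language (`SET <var> <int>`, `ADD <var> <int>`, `PRINT <var>`); the language and the `interpret` function are hypothetical. It translates and executes one line at a time and keeps no translated copy, so lines before an error still run, and running the program again means interpreting it all over again.

```python
def interpret(source: str, output: list[int]) -> None:
    """Translate and execute a toy program one line at a time.

    Printed values are appended to `output`; no object code is kept.
    """
    variables: dict[str, int] = {}
    for number, line in enumerate(source.strip().splitlines(), start=1):
        parts = line.split()
        if len(parts) == 3 and parts[0] == "SET":
            variables[parts[1]] = int(parts[2])
        elif len(parts) == 3 and parts[0] == "ADD":
            variables[parts[1]] += int(parts[2])
        elif len(parts) == 2 and parts[0] == "PRINT":
            output.append(variables[parts[1]])
        else:
            # The error is only discovered when execution reaches this line.
            raise SyntaxError(f"line {number}: cannot interpret {line!r}")


results: list[int] = []
try:
    interpret("SET x 5\nPRINT x\nJUMP x", results)
except SyntaxError as error:
    print(error)  # line 3: cannot interpret 'JUMP x'
print(results)    # [5]  (lines 1 and 2 already ran)
```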

A video we watched compared compilers and interpreters. The most obvious difference is that an interpreter starts right away but runs slowly, whereas a compiler needs extra preparation but the result runs quickly and efficiently. In fact, some software combines a compiler and an interpreter. Java, for example, is first compiled into bytecode, which the Java Virtual Machine then interprets (and may compile further while the program runs).
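Java is not the only hybrid. As an illustration in Python (swapped in here to keep one language for these sketches, not taken from the video), CPython also compiles a whole source file into bytecode first and then interprets that bytecode in its virtual machine. The built-in `compile` and `exec` functions and the standard `dis` module make both steps visible; the exact bytecode instruction names differ between Python versions.

```python
import dis

source = "total = 0\nfor n in range(1, 4):\n    total += n\n"

# Step 1 (compiler-like): translate the whole program into a bytecode object at once.
code_object = compile(source, "<example>", "exec")

# Peek at the first few bytecode instructions (names vary by Python version).
instruction_names = [ins.opname for ins in dis.get_instructions(code_object)]
print(instruction_names[:5])

# Step 2 (interpreter-like): the CPython virtual machine executes the bytecode.
namespace: dict = {}
exec(code_object, namespace)
print(namespace["total"])  # 6
```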

Application Software

Application software lets users perform tasks other than running the computer itself. In other words, it is software designed to help the user perform specific tasks, and it is what makes a computer personalized. There are generally three types of application software: general-purpose application software, special-purpose application software, and bespoke application software.

General-Purpose Application Software

General-purpose software can be used for many different tasks rather than being limited to one particular function. Word processors, spreadsheets, and presentation software are all examples of generic software; presentation software, for instance, can be used to create not only presentations but also videos and other things.

Special-Purpose Application Software

This type of software is created to carry out one specific, narrow and focused task. Web browsers, calculators, media players, and calendar programs are common examples of special-purpose application software, since each of them performs one specific task.

Bespoke Software

Bespoke software is tailor-made, or custom-made, for a specific user and purpose. It is made exclusively for an individual or organization; examples include software for the military and missile/UAV operations, software for hospitals and medical equipment, and software written inside banks and other financial institutions. Bespoke software is quite different from off-the-shelf software, which is ready-made for many users rather than tailor-made.

This week, we dug into the field of software, another essential component of a computing system. The more we learn, the clearer the importance of computers and computational thinking becomes: computational thinking is something that can probably help us all along, even outside of CS class.